There was no universe in which Tricia was not obsessed with flight. In tandem with her obsession came its inevitable, plentiful opposition: a cavalcade of obstacles meant to prevent her ascent to the skies. These often took the form of previously innocuous people suddenly turned mortal enemies, or of perfect storms of inconvenient circumstance, yet they always seemed so neatly arranged before her that they ended up clearly marking out the very upward path they had been meant to dissuade her from taking. Forthright, unshakeable, and possessed of a patience greater than most could comprehend, she would, time and again and however improbably, make her way up.

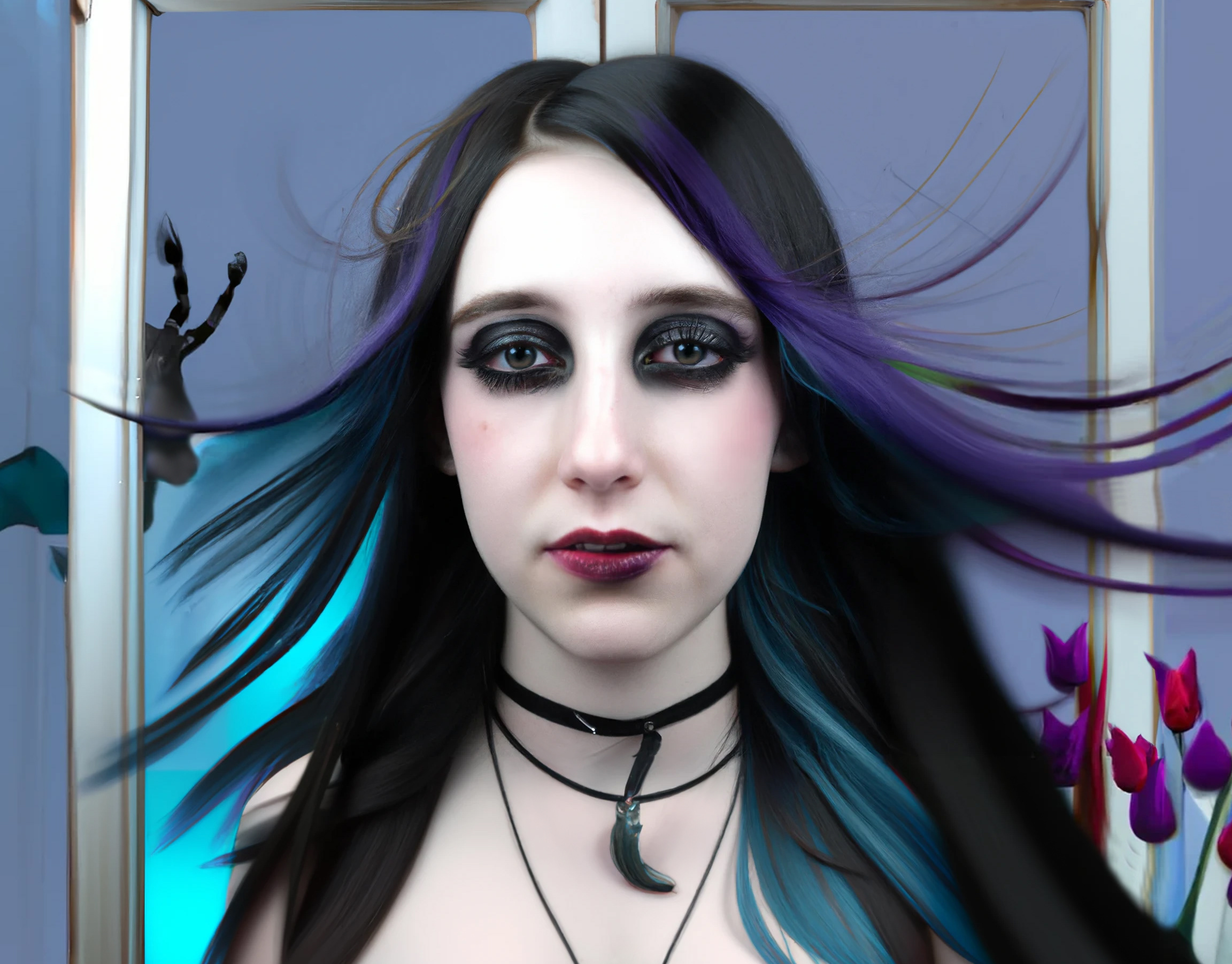

Tricia 53A679

Tricia 53A679 (LVL-1~)

Tricia 53A679 (LVL0)

Tricia 53A679 (LVL1~)

Tricia 53A679 (LVL2~)

Tricia 53A679 (LVL3)

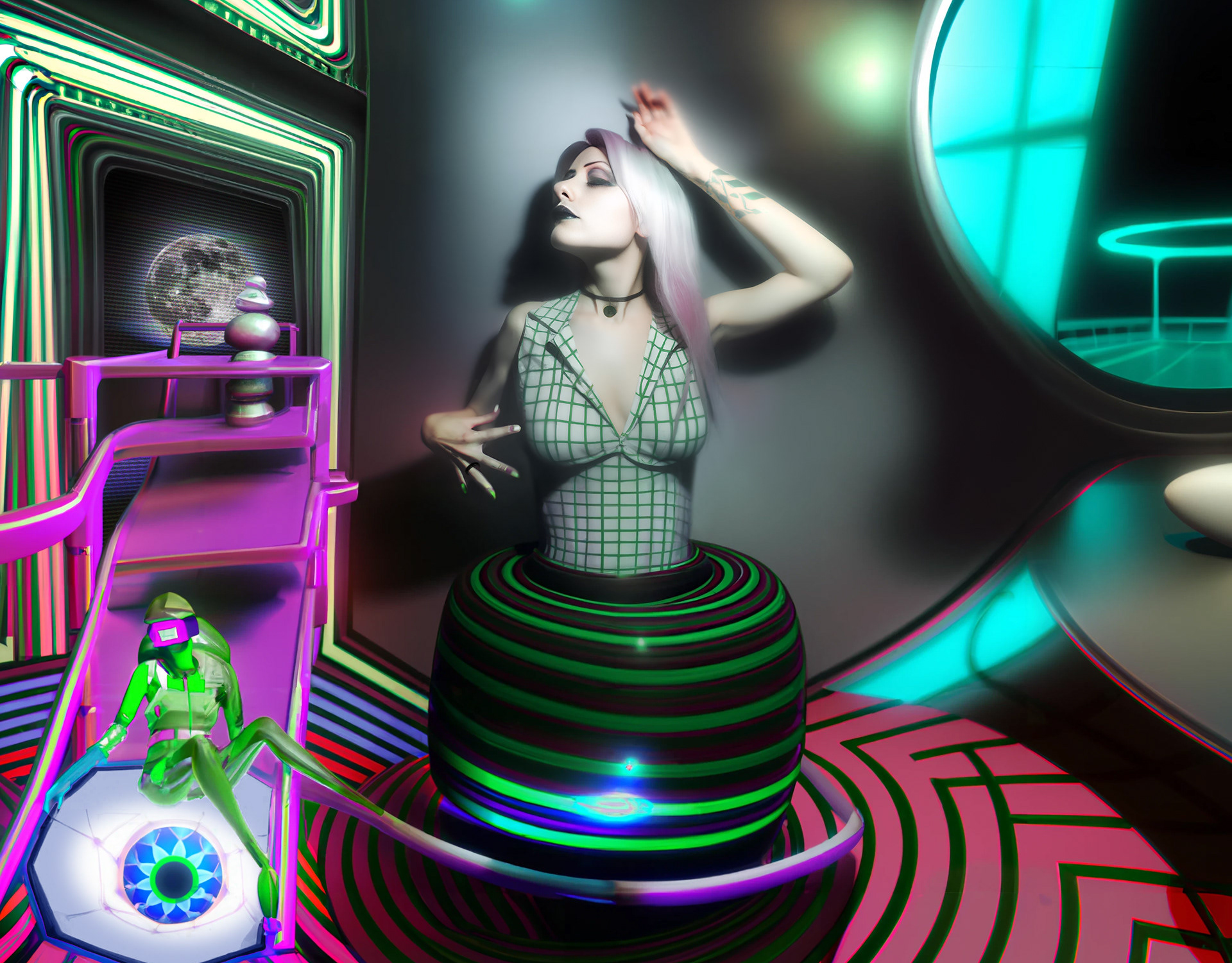

Tricia D752CA

Tricia D752CA (LVL0)

Tricia D752CA (LVL1)

Tricia D752CA (LVL2)

Tricia D752CA (LVL3)

Tricia D752CA (LVL4)

Tricia 6F13ED

Tricia 6F13ED (LVL-1~)

Tricia 6F13ED (LVL0)

Tricia 6F13ED (LVL1)

Tricia 6F13ED (LVL2)

Tricia 6F13ED (LVL3)

About My Process

It's probably no surprise that an AI-assisted image workflow is constantly evolving. What has surprised me is how much of that evolution has been driven by my dissatisfaction and frustration with the current versions of almost every one of these generative engines. Luckily, there are enough of them that whenever one fails to produce results I find suitable, I can usually find some way of bringing in another.

For Tricia's image set, I started with faces produced in Midjourney, then performed several "zoom out" operations in a row on each variation of the face. This is Midjourney's version of "outpainting," or extending an image: adding new (ideally contextually appropriate) content around the frame of the original. At the time, Midjourney had not yet introduced an editor that accepted uploaded external images, so as soon as I downloaded its results and took them into Photoshop, Midjourney was of no further use (they've since corrected this glaring omission from their feature set).

Once I had set the images up in Photoshop layers and tweaked them to my satisfaction, I exported them and uploaded them to mage.space (an online service running Stable Diffusion/SDXL under the hood, with a much more lenient and convenient content filter than most other such engines). Using these as image prompts, and carefully balancing the weight of the image prompt against the text prompt, I could effectively use Stable Diffusion as an AI upscaler to add (and often correct) detail in the areas that needed it most. These are usually the face and hands, but the same technique works on any portion of the image. The key is to keep the variability and prompt influence low enough that the newly generated content can easily be masked and blended back into the main image in Photoshop. Done carefully, it becomes practically impossible to tell that different generations, or even different generators, are responsible for different sections of the image. In this way, I would gradually zoom back in toward the original face until every crop and magnification looked ready to go.
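For readers curious what "masking and blending back in" amounts to numerically, here is a minimal, hypothetical sketch of a feathered paste, not a description of my actual Photoshop steps. It assumes grayscale images stored as 2D lists of floats, an upscaled patch already resized to the region it replaces, and a simple linear falloff at the patch edges; the function names `feather_mask` and `paste_blended` are made up for illustration.

```python
def feather_mask(h, w, feather):
    """Hypothetical helper: an h x w alpha mask that is 1.0 in the interior
    and ramps linearly down to 0.0 at the patch border over `feather` pixels."""
    mask = []
    for y in range(h):
        row = []
        for x in range(w):
            # Distance (in pixels) from this pixel to the nearest patch edge.
            d = min(x, y, w - 1 - x, h - 1 - y)
            row.append(min(1.0, d / feather) if feather > 0 else 1.0)
        mask.append(row)
    return mask


def paste_blended(base, patch, top, left, feather):
    """Hypothetical helper: composite `patch` onto a copy of `base` at
    (top, left), mixing each pixel as alpha * patch + (1 - alpha) * base."""
    out = [row[:] for row in base]  # leave the original image untouched
    mask = feather_mask(len(patch), len(patch[0]), feather)
    for y, prow in enumerate(patch):
        for x, p in enumerate(prow):
            a = mask[y][x]
            b = out[top + y][left + x]
            out[top + y][left + x] = a * p + (1 - a) * b
    return out


# Usage: a 3x3 bright patch pasted into a dark 5x5 image with a 1-pixel feather.
base = [[0.0] * 5 for _ in range(5)]
patch = [[1.0] * 3 for _ in range(3)]
result = paste_blended(base, patch, 1, 1, 1)
```

A wider feather hides seams better but lets more of the original show through near the patch border, which is one reason low-variability generations, whose edges already match the surrounding image, blend in so easily.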

Occasionally, I can bypass this process with Photoshop's integrated AI, using either "generative fill" or the remove tool, but its results are often not quite on the level of Midjourney or other external engines, particularly with human features or with larger, more complex areas of imagery. There's also a tendency for Firefly-powered results to resemble stock imagery, an understandable side effect of its generative model having been trained on Adobe's massive proprietary library of stock images.

Notably, the "main" face in this collection, Tricia 53A679, contains the first inhumantouch contribution from DALL-E 3: the rather majestic flying horse.